Lab 9 - Surfaces: Accuracy of DEMs

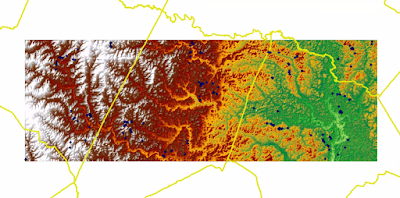

As you might have noticed, we use DEMs a lot in our lab assignments. They are very popular in a wide range of spatial modeling applications. With recent technological advances in remote sensing, such as LiDAR (Light Detection and Ranging), higher-resolution elevation models are becoming more widely available. But just how accurate are they? In this lab session, we performed a vertical accuracy assessment of a DEM subset in North Carolina and interpreted the effects of various interpolation methods on DEM accuracy.

The quality of a DEM can be determined in various ways, including calculating the 68th and 95th percentiles of the errors, the Root Mean Square Error (RMSE), and the Mean Error (also known as 'Bias'). Additional quality observations can be made by visually investigating the spatial distribution of reference points against a raster; the sampling design or system; and the counts, minimum, and maximum elevation values per land cover type.
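As a rough illustration of these metrics outside of Excel, here is a minimal Python sketch. It assumes each error is the DEM elevation minus the reference elevation, and that the percentiles are taken over absolute errors with linear interpolation between ranks; both are assumptions made for this example, not details from the lab. The function names and sample values are hypothetical.

```python
import math

def vertical_errors(dem_values, reference_values):
    """Error at each checkpoint, assumed here to be DEM minus reference."""
    return [d - r for d, r in zip(dem_values, reference_values)]

def rmse(errors):
    """Root Mean Square Error: square root of the mean squared error."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def mean_error(errors):
    """Mean Error (bias): the signed average, so opposite errors cancel."""
    return sum(errors) / len(errors)

def percentile_abs(errors, pct):
    """Percentile of the absolute errors, interpolating between ranks."""
    ranked = sorted(abs(e) for e in errors)
    pos = (len(ranked) - 1) * pct / 100.0
    lower = math.floor(pos)
    upper = min(lower + 1, len(ranked) - 1)
    return ranked[lower] + (ranked[upper] - ranked[lower]) * (pos - lower)

# Made-up elevations (not the lab data), in the same vertical units.
dem = [101.2, 98.7, 105.1, 99.9, 102.4]
ref = [101.0, 99.0, 104.8, 100.0, 102.0]
errors = vertical_errors(dem, ref)

print("RMSE:", round(rmse(errors), 3))
print("Mean error (bias):", round(mean_error(errors), 3))
print("68th percentile:", round(percentile_abs(errors, 68), 3))
print("95th percentile:", round(percentile_abs(errors, 95), 3))
```

Note that RMSE and the percentiles are always non-negative, while the mean error keeps its sign.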

Specifically, for our lab we used Spatial Analyst Extraction tools in ArcGIS, as well as a handful of formulas in Excel to derive accuracy metrics for a DEM, while noting any differences between land cover types. My numerical results are shown in the table below.

When measuring error, smaller values are better; for signed measures such as bias, values closer to zero are better. Based on the results in the table, Land Cover A (Bare Earth and Low Grass) has the highest accuracy by RMSE, while Land Cover D (Fully Forested) has the lowest. However, when bias is considered, Land Cover D (Fully Forested) has the highest accuracy, while Land Cover E (Urban) has the lowest. Because the table contains both positive and negative values, we know that the LiDAR data both over- and under-estimated the reference values. Bias therefore gives an analyst a way to check whether the LiDAR errors lean consistently in one direction.
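To see why RMSE and bias can rank land cover types differently, the per-class comparison can be sketched in Python. This is only an illustrative sketch: the `summarize_by_land_cover` helper, the class labels, and the sample elevations are all hypothetical, and the error is again assumed to be DEM minus reference, so a positive bias means the DEM sits above the reference on average.

```python
import math
from collections import defaultdict

def summarize_by_land_cover(checkpoints):
    """Group (land_cover, dem_z, ref_z) checkpoints and summarize each class."""
    grouped = defaultdict(list)
    for land_cover, dem_z, ref_z in checkpoints:
        grouped[land_cover].append(dem_z - ref_z)  # assumed sign convention

    summary = {}
    for land_cover, errors in grouped.items():
        n = len(errors)
        bias = sum(errors) / n
        summary[land_cover] = {
            "count": n,
            "rmse": math.sqrt(sum(e * e for e in errors) / n),
            "bias": bias,
            # Sign of the bias: did the DEM over- or under-estimate on average?
            "direction": "over" if bias > 0 else "under" if bias < 0 else "none",
        }
    return summary

# Made-up checkpoints (not the lab data).
sample = [
    ("A", 10.5, 10.0), ("A", 9.5, 10.0),   # errors cancel: bias 0, RMSE 0.5
    ("D", 21.0, 20.0), ("D", 22.0, 20.0),  # both too high: positive bias
]

for land_cover, stats in sorted(summarize_by_land_cover(sample).items()):
    print(land_cover, stats)
```

In the made-up class "A", the two errors cancel, so the bias is zero even though the RMSE is not. A bias near zero shows that errors are not systematically one-sided; it does not show that the individual errors are small.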
